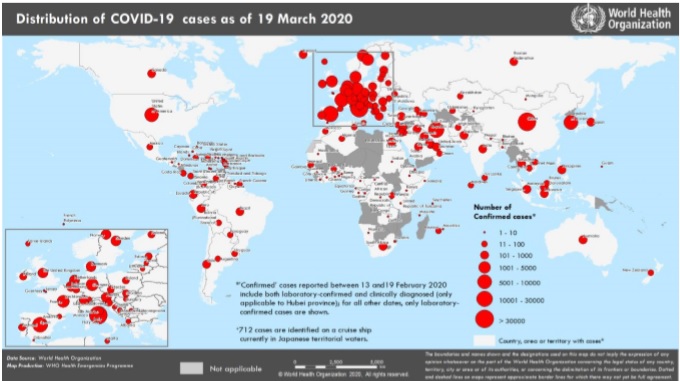

We are living in unprecedented times in 2020. Just as we ushered in a new decade with optimism, the world was gripped by a virus that spread its tentacles across the entire globe. For me this was yet another crisis, having already seen in my career the mayhem caused by the dot-com crash, the 9/11 attacks, the SARS epidemic, the 2008 financial crisis and the H1N1 swine flu. What was alarming this time, however, was the rapid, distributed nature of the crisis: the coronavirus contagion engulfed nearly every country in the world after its first epicenter in the city of Wuhan, China. As the spread reached alarming levels, the World Health Organization declared it a pandemic on March 11th of this year.

However, this crisis was not completely unanticipated. In fact, some of the world’s best thought leaders and ambassadors, like Bill Gates, had been warning the world for the last few years that the next big crisis would be caused by an epidemic rather than a nuclear war.

Video: “The next outbreak? We’re not ready”, TED Talk by Bill Gates, 2015. Source: YouTube

Unfortunately, the warnings were not taken seriously by the authorities and world leaders. In fact, regressive measures were taken, like the disbanding of the Office of Pandemics and drastic reductions in the budgets allocated to agencies like the CDC. Even the World Health Organization is seeing funding cuts from donor nations and a ramp-down in budget allocation. In an era where we hear buzzwords like “digital transformation” and “data is the new oil”, it is astonishing that important decisions like healthcare budget cuts are not data driven. The billions saved through budget cuts are now going to cost the global economy trillions of dollars.

The pandemic has clearly shown that our healthcare systems are not designed or architected for this kind of distributed crisis. A lot of myths and biases about the robustness of healthcare systems in developed nations have also been broken by the pandemic. The swiftness of its spread in this hyper-connected, globalized world has taken us by surprise.

As the world gets ready to fight this new world war against an unseen enemy, we need to win as many battles as possible. It is important for us to convert the weaknesses in our current systems into strengths. Technology and data can be leveraged to make a difference to the current situation.

Some important points on how technology can help the healthcare system at this moment:

Scalability of Testing:

We have all been hearing from epidemiology experts the secret to fighting this pandemic: tests, tests and more tests! However, the availability of testing centers and the number of tests have to scale exponentially to make this possible. A traditional, centralized model of test data input, processing and test result output can only scale linearly. It is limited by design.

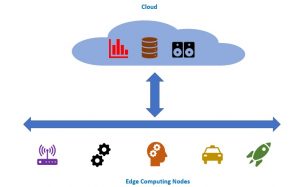

This is where distributed solutions can help organizations achieve elastic, exponential scaling of their testing capabilities and quicker turnaround times for test results. Distributed computing, using the capabilities of edge, cloud and datacenters with high-speed connectivity, can make this elastic scaling of testing possible.

This can provide scalability in a pandemic scenario by allowing smaller clinical pathology labs to collaborate with larger central laboratories. By interfacing laboratory equipment with the distributed cloud network, pathologists can finalize reports from any remote location globally. It also lets patients access their reports online without exposing themselves to risk by visiting the lab.
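To make the scaling argument concrete, here is a minimal sketch of how pooling labs shortens turnaround. It assumes each incoming sample is simply routed to whichever lab would finish it earliest. The function name `minutes_to_clear` and the processing times are hypothetical figures chosen for illustration, not measurements from any real laboratory network.

```python
import heapq

def minutes_to_clear(sample_count, minutes_per_sample):
    """Minutes until all samples are processed when each sample is routed
    to whichever lab would finish it earliest.

    minutes_per_sample holds one entry per lab (hypothetical figures).
    """
    # Heap of (time at which the lab would finish its next sample, lab index).
    heap = [(m, lab) for lab, m in enumerate(minutes_per_sample)]
    heapq.heapify(heap)
    finished_at = 0
    for _ in range(sample_count):
        # The lab at the top of the heap is the one that frees up first.
        finished_at, lab = heapq.heappop(heap)
        heapq.heappush(heap, (finished_at + minutes_per_sample[lab], lab))
    return finished_at

# One central lab working alone (1 minute per sample).
central_only = minutes_to_clear(1000, [1])
# The same central lab plus four smaller labs (4 minutes per sample each).
with_partner_labs = minutes_to_clear(1000, [1, 4, 4, 4, 4])
print(central_only, with_partner_labs)
```

In this toy setup, four small labs that are each four times slower than the central lab still halve the time needed to clear the backlog, because their capacity adds up. A real network would also have to account for sample transport, quality control and uneven demand.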

Augmentation of Testing:

Some of the countries at the forefront of the war against the virus realized early on that testing methods and systems would need to be augmented. One such country, South Korea, used artificial intelligence to augment regular testing methodologies. Terenz Corp, a South Korean company, works on AI-based decision support systems for critical diseases, aiming to improve quality of life by detecting high-risk diseases at an early stage and monitoring them with the Terenz platform. To speed up detection, Terenz developed an AI-based screening system that it reports can detect COVID-19 from chest X-rays within a few seconds with an accuracy of 98.14%.

To detect COVID-19 and classify images into Normal, Pneumonia and COVID-19, an AI engine leveraging a Convolutional Neural Network (CNN) was developed. The CNN performed well, with a reported overall accuracy of 98.141%. Read more about the solution from Terenz here.

Extensive use of Data Analytics:

Taiwan leveraged its national health insurance database and integrated it with its immigration and customs database to create big data for analytics. The merged data let authorities collect information on every citizen’s 14-day travel history, generate real-time alerts during a clinical visit based on travel history and clinical symptoms to aid case identification, and ask those who had visited high-risk areas to self-isolate. Even though the country is only 81 miles off the coast of mainland China, it was not as hard hit as other nations in the region, as decisions were made based on data systems architected after the SARS epidemic.
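The kind of join described above can be sketched in a few lines. This is only an illustration of the idea, not Taiwan's actual system: the record layouts, field names (`person_id`, `area`, `return_date`) and the function `flag_for_self_isolation` are all assumptions made for the example.

```python
from datetime import date, timedelta

def flag_for_self_isolation(insured, travel, high_risk_areas, today, window_days=14):
    """Return the sorted IDs of insured people who came back from a
    high-risk area within the last `window_days` days.

    insured: dict keyed by person ID (stand-in for the health insurance data).
    travel:  list of trip dicts (stand-in for immigration and customs data).
    """
    cutoff = today - timedelta(days=window_days)
    flagged = set()
    for trip in travel:
        recent = cutoff <= trip["return_date"] <= today
        if recent and trip["area"] in high_risk_areas and trip["person_id"] in insured:
            flagged.add(trip["person_id"])
    return sorted(flagged)

# Hypothetical sample records.
insured = {"A1": {"clinic": "north"}, "B2": {"clinic": "south"}, "C3": {"clinic": "east"}}
travel = [
    {"person_id": "A1", "area": "high-risk-region", "return_date": date(2020, 3, 1)},
    {"person_id": "B2", "area": "low-risk-region", "return_date": date(2020, 3, 5)},
    {"person_id": "C3", "area": "high-risk-region", "return_date": date(2020, 1, 10)},
]

flagged = flag_for_self_isolation(
    insured, travel, {"high-risk-region"}, today=date(2020, 3, 10)
)
print(flagged)
```

Only the person who returned from the high-risk region inside the 14-day window is flagged; the older trip and the low-risk trip are ignored. A production system would run this as a database join with strict access controls rather than in application code.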

Looking back at historical pandemic data can assist in making a lot of important decisions and in predictive analytics. In the current scenario, because of the high infection rate, the contagion is being compared with historical data from the 1918 Spanish Flu, which caused an estimated 39 million deaths, wiping out 2% of the global human population. Important lessons can be learnt from the 1918 pandemic in terms of macroeconomics, the progression of the virus and mortality numbers.

Although by some accounts the Spanish Flu started in China and then spread to Europe, North America and South Asia, the highest mortality rate by far was seen in British India, where cumulative fatalities reached 5.2% of the population during the pandemic. China’s death rate was not nearly as high, but because of its large population, it contributed significantly to the number of global deaths. The US had a cumulative death rate of 0.5%, with an associated number of deaths of 550,000.

Deep learning models using neural networks can be trained on datasets from the Spanish Flu, SARS and COVID-19 to build predictive models for better decision making. This actionable intelligence can make a difference to decision making in nations like India, which suffered the most in a similar novel viral outbreak a hundred years ago.

Secure Data Exchange:

This war will require the coordinated sharing of data between various entities (agencies, states, countries, global bodies and the private sector). One of the biggest hurdles in the healthcare industry is the sharing of data, as it is highly regulated by compliance laws and requirements such as HIPAA, the HITECH Act, MACRA, GDPR and chain of custody. The data will be needed for rapid test-bedding, vaccine development, tracking herd immunity, clinical trials and critical decision making. Data lifecycle processes can be automated via RPA (Robotic Process Automation). The data can also be used to develop applications like the contact tracing app developed by GovTech in Singapore. The result can become a labyrinth of apps and data, so consistency of the application workloads and support for various data and file formats will be critical for stable input and output systems.

It is also necessary that the data systems are highly available, with 24x7 access across distributed research locations. Disruption in the availability of data systems and failure to comply with service level agreements (SLAs) can seriously hamper the progress of rapid medical research and innovation. Technologies like Big Data Clusters from Microsoft Corp, or any open source system, can be a building block of such a healthcare data system.

It is equally important to make sure the data exchange systems are secure, with encryption at various levels and multi-layer security enabled. For remote workers, VDI (virtual desktop infrastructure) could be a mechanism to access the data systems. This would allow organizations like the WHO and other similar agencies to act as secure data gatekeepers.

Optimization of Supply Chain Management:

Healthcare staff and hospitals treating COVID-19 are facing a severe shortage of personal protective equipment and ventilators. Healthcare systems were not planned for a pandemic of this proportion.

OEMs and other companies are rushing to make sure that their assembly lines are running at full throttle. However, complex medical equipment designs consist of multiple components from numerous vendor sources. Industry 4.0 aims to ensure that the supply chain function provides integrated operations from suppliers to end healthcare consumers. This will be critical for delivering vaccines to the nearly 8 billion people living all over the planet, so that vaccines can be transported safely and as early as possible to its most distant corners using cold chain storage.

With effective implementation of automation through 3D printing, IoT, blockchain and AI, the Industry 4.0 supply chain can meet its key performance indicators and roll out essential medical equipment and gear at a faster pace than ever before. Real-time planning gives more flexibility in this situation, and accurate performance management makes sure that information flows from high-level KPIs down to granular process endpoints like the real-time location of a component. Data from the various sources can also help government trade departments and agencies make sure that components are procured in time to enable local production near the virus epicenters. Blockchain can be implemented to make sure the veracity of supply chain data is maintained. Startups like PencilData work on maintaining data veracity while protecting supply chain data from invisible cyber attacks.
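The tamper-evidence idea behind blockchain can be illustrated with a simple hash chain, where every supply chain record stores the hash of the record before it. This is a minimal sketch only: it leaves out everything that makes a real blockchain distributed (consensus, replication, signatures), it is not how any vendor named here implements its product, and the record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def _digest(prev_hash, payload):
    # Serialize deterministically so the same record always hashes the same way.
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def add_record(chain, payload):
    """Append a record that is linked to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev_hash, "payload": payload,
                  "hash": _digest(prev_hash, payload)})

def verify(chain):
    """Return True only if no record was altered, removed or reordered."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(record["prev"], record["payload"]):
            return False
        prev_hash = record["hash"]
    return True

# Hypothetical shipment events for a ventilator component.
ledger = []
add_record(ledger, {"part": "valve", "qty": 500, "event": "shipped"})
add_record(ledger, {"part": "valve", "qty": 500, "event": "received"})
print(verify(ledger))

# Quietly changing an earlier quantity breaks the chain.
ledger[0]["payload"]["qty"] = 50
print(verify(ledger))
```

Because each hash covers the previous hash, changing any earlier record invalidates every check from that point on, which is what lets downstream parties detect altered supply chain data.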

This could be a long war, but with a globally coordinated response and effective use of technology systems, it could tilt in our favor. We definitely have the smart technology systems of the 21st century as weapons in our arsenal to gain victory over this deadly, invisible enemy!

DISCLAIMER: The information in this document is not a commitment, promise or legal obligation to deliver any material, code or functionality. This document is provided without a warranty of any kind, either express or implied, including but not limited to, the implied warranties of merchantability, fitness for a particular purpose, or non-infringement. This document is for informational purposes and may not be incorporated into a contract. Drootoo assumes no responsibility for errors or omissions in this document. Contact us at [email protected] for more information on the topic.

(The blog will be updated regularly during this challenging period.)

About organizations mentioned in the article:

Drootoo is a Singapore-headquartered company specializing in distributed cloud systems.

McKinsey is a global consulting company.

PencilData is a Silicon Valley based company specializing in data security.

Terenz Corp is a South Korean company specializing in AI-based decision support systems for critical healthcare diseases.

Microsoft Corp (MSFT) is a global leader in software, data and cloud systems.

World Health Organization (WHO) is the leading global health body.

Centers for Disease Control and Prevention (CDC) is the agency in charge of epidemics under the United States Government.

Taiwan CDC is the agency in charge of epidemics in Taiwan.

GovTech is the agency in charge of government technology for the Republic of Singapore.